Econ 4.0: Can AI be trusted?

15 Mar 2021

By Raju Chellam

I recently downloaded an AI (artificial intelligence) program designed to learn my likes so that it could send me the right recommendations. I typed in: “I love to eat spicy Penang assam laksa, mee goreng mamak and ayam percik. I love to read technical and medical fiction. I love to visit the Perdana Botanical Garden and ride on the Funicular Railway on Penang Hill. I like sci-fi and biographical movies.”

I then asked the program which words or phrases it did not understand. It processed my input, digested the data and came back with a single question: “What is love?”

If that makes some of us smile, it may also make some of us sneer. That’s the paradox of AI: there are as many people who “love” it as there are people who don’t. On the anti-AI side are people like Tesla CEO Elon Musk. In 2017, at a meeting of the US National Governors Association, he said AI posed a fundamental risk to the existence of human civilisation. On the pro-AI side, Facebook CEO Mark Zuckerberg posted a video calling such negative talk “pretty irresponsible”.

Philosophy aside, most businesses are quite bullish about AI and are set to plonk US$110 billion on AI-related solutions and services in 2024, up a whopping 193% from the US$37.5 billion they spent in 2019, according to market intelligence provider IDC. That works out to a compound annual growth rate (CAGR) of roughly 24% between 2019 and 2024.
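For readers who want to check the spending arithmetic, here is a minimal sketch in Python. It takes the two IDC totals quoted above as given and assumes five compounding years (2019 to 2024); the function and variable names are illustrative only. Compounding US$37.5 billion up to US$110 billion over five years implies an annual growth rate of about 24%.

```python
# A minimal sketch: reproduce the growth arithmetic behind the quoted
# IDC figures (US$37.5 billion in 2019 rising to US$110 billion in 2024).
# Function and variable names are illustrative, not from any IDC tool.

def total_growth_pct(start: float, end: float) -> float:
    """Percentage increase from start to end."""
    return (end / start - 1) * 100


def cagr_pct(start: float, end: float, years: int) -> float:
    """Compound annual growth rate, in percent, over `years` periods."""
    return ((end / start) ** (1 / years) - 1) * 100


spend_2019 = 37.5    # US$ billion, 2019 (as quoted from IDC)
spend_2024 = 110.0   # US$ billion, 2024 forecast (as quoted from IDC)
years = 2024 - 2019  # five compounding periods (assumption)

growth = total_growth_pct(spend_2019, spend_2024)
cagr = cagr_pct(spend_2019, spend_2024, years)

print(f"Total growth: {growth:.0f}%")  # Total growth: 193%
print(f"CAGR: {cagr:.1f}%")            # CAGR: 24.0%
```

The total-growth figure matches the 193% cited; the CAGR follows from taking the fifth root of the overall growth multiple (about 2.93).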

AI is a buzzword today. Simply put, AI is about getting computers to perform tasks or processes that would be considered intelligent if done by humans. For example, an autonomous car is not just making suggestions to the human driver; it is the one doing the driving.

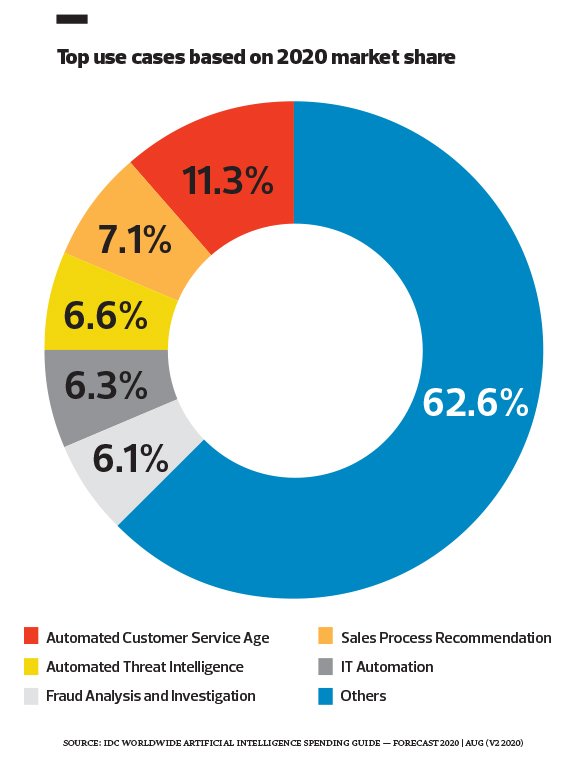

The two industries that will spend the most on AI solutions are retail and banking. “The retail industry will mainly focus its AI investments on boosting customer experience via chatbots and recommendation engines, while banking will focus on fraud analysis and investigation,” IDC says. “The industries that will see the fastest growth in AI spending between now and 2024 are media, federal or central government agencies, and professional services.” (See chart.)

In Malaysia, MIMOS, the national applied research and development (R&D) centre under the Ministry of International Trade and Industry, leads the field in AI. Other key players include Symprio, Fusionex, Atilze Digital, Crayon, Appier, Genaxis, Wise AI, SAS and Avanade Malaysia, according to RHA Media.

Fair and square

AI and its cousin ML (machine learning) will change human society in ways we have yet to imagine. AI applications now cut across many sectors and functions, from finance, healthcare, credit approvals and insurance claims to transportation and human resources. AI is also embedded in home appliances and smart devices. For the first time in human history, machines can make decisions without human involvement. What if those decisions are biased? How can humans infuse ethics into AI algorithms? Can AI be trusted?

There have been a few attempts at governing AI in the US, the UK, Canada and the EU, as well as at the international level. For example, the Organisation for Economic Co-operation and Development (OECD) adopted the OECD Principles on AI, which identify five principles: inclusive growth, sustainable development and well-being; human-centred values and fairness; transparency and explainability; robustness, security and safety; and accountability.

The most notable effort in the region is the AI Ethics and Governance Body of Knowledge (BoK). Launched on Oct 16, 2020, it is the result of a collaboration between the Singapore Computer Society and the Infocomm Media Development Authority of Singapore. It has contributions from 30 authors and 25 reviewers and is split into 22 chapters in seven sections. Here’s the link: ai-ethics-bok.scs.org.sg.

S Iswaran, Singapore’s minister for communications and information, said at the launch that the world is accelerating towards a digital future with AI set to permeate all aspects of our lives. “Trust in digital technologies and AI is key if we are to scale up adoption,” he said. “Hence, it is crucial that the development and deployment of AI is human-centric — and safe.”

Some countries have spelt out their approach to AI. The US has issued guidelines that leave the industry to develop AI applications almost unregulated. The EU, on the other hand, believes in regulation, especially in areas it deems “high-risk”. There is some consensus among AI researchers that a balanced approach is needed, with rules that both protect the public and promote industry innovation. Smart regulation will build trust among the public as well as encourage innovation.

On the global stage, the World Economic Forum (WEF) launched the Global AI Action Alliance (GAIA) in late January to accelerate the adoption of inclusive, transparent and trusted AI. “AI holds the promise of making organisations 40% more efficient by 2035, unlocking an estimated US$14 trillion in new economic value,” the WEF notes. “But as AI’s transformative potential has become clear, so, too, have the risks posed by unsafe or unethical AI systems.”

Recent controversies on facial recognition, automated decision-making and Covid-19 tracking have shown that realising AI’s potential requires substantial buy-in from citizens and governments, based on their trust that AI is being built and used ethically.

“The GAIA is a new, multi-stakeholder collaboration platform designed to accelerate the development and adoption of AI tools globally and in industry sectors,” the WEF states. “GAIA brings together 100 leading companies, governments, international organisations, non-profits and academics united in their commitment to maximising AI’s societal benefits while minimising its risks.”

Trust bust

Early this year, Gartner released its “Predicts 2021” research reports, including one that outlines the serious, wide-reaching ethical and social problems it predicts AI will cause in the next several years. The report calls attention to the unintended results of new technologies, especially AI.

For example, generative AI is now able to create amazingly realistic photographs of people and objects that don’t actually exist. “AI that can create and generate hyper-realistic content will have a transformational effect on the extent to which people can trust their own eyes,” Gartner’s report notes.

What can businesses do to mitigate these risks? “Establish criteria for responsible AI consumption and prioritise vendors that can demonstrate responsible AI and clarity in addressing related societal concerns,” Gartner advises. “Companies should also educate their staff on deep-fakes and generative AI. We are now entering a zero-trust world. Nothing can be trusted unless it is certified as authenticated using cryptographic digital signatures.”

Dr Yaacob Ibrahim, Singapore’s former minister of communications and information, wants technology to serve a higher purpose. “While technological solutions can be disruptive, it is up to governments and industry to soften the effects of the disruption as much as possible,” he notes.

Job displacement through automation and digitalisation has created anxiety among workers. There is, therefore, a need to account for this anxiety in how digital solutions are deployed. “Digital readiness for those affected, and where there are specific alternatives for them, should be one key consideration,” Yaacob says. “Timing is essential. Technology should be deployed in a humane way that improves the quality of life of society at large.”

Is intelligence, whether artificial or human, an attribute reserved for higher consciousness? That’s the question I asked my newly downloaded AI program. Its smart-aleck reply: “Intelligence is like underwear. It is crucial that you have it, but not necessary that you show it off.”

The writer is vice-president of New Technologies for Fusionex International, Asia’s leading big data analytics company

Source: The Edge Markets